Ceva releases third-generation NeuPro processor architecture

Intended for AI and machine learning inference workloads in automotive, industrial, 5G networks and handsets, surveillance cameras and edge computing, the NeuPro-M is the latest generation processor architecture from Ceva.

The self-contained heterogeneous architecture is composed of multiple specialised co-processors and configurable hardware accelerators that seamlessly and simultaneously process diverse deep neural network workloads. The company reports that this boosts performance by a factor of five to 15 compared with its predecessor. Claimed to be an industry first, NeuPro-M supports both SoC and heterogeneous SoC (HSoC) scalability to achieve up to 1,200 tera operations per second (TOPS). It also offers optional robust secure boot and end-to-end data privacy.

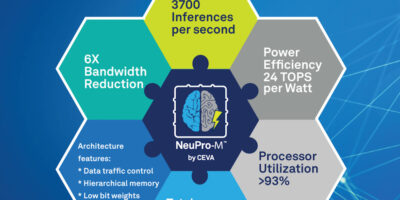

The NeuPro-M-compliant processors initially include two pre-configured cores, the NPM11 and the NPM18. The NPM11 is a single NeuPro-M engine offering up to 20 TOPS at 1.25GHz, while the NPM18 has eight NeuPro-M engines, delivering up to 160 TOPS at 1.25GHz. When processing a ResNet50 convolutional neural network, a single NPM11 core achieves a five-fold performance increase and a six-fold memory bandwidth reduction versus its predecessor, reports the company, resulting in an efficiency of 24 TOPS per Watt.
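The quoted peak figures can be cross-checked with simple arithmetic. The sketch below assumes, purely for illustration, that peak throughput scales linearly with the number of engines; Ceva does not state how the 1,200 TOPS ceiling is reached, and the helper names `peak_tops` and `engines_needed` are hypothetical.

```python
import math

# Figures quoted in the article; all peak values are at 1.25 GHz.
NPM11_TOPS_PER_ENGINE = 20.0   # one NeuPro-M engine (NPM11)
NPM18_ENGINES = 8              # NPM18 = eight engines
MAX_SCALED_TOPS = 1200.0       # quoted SoC/HSoC ceiling


def peak_tops(engines: int, tops_per_engine: float = NPM11_TOPS_PER_ENGINE) -> float:
    """Aggregate peak TOPS, ASSUMING throughput scales linearly with engine count."""
    return engines * tops_per_engine


def engines_needed(target_tops: float, tops_per_engine: float = NPM11_TOPS_PER_ENGINE) -> int:
    """Smallest whole number of engines whose linearly scaled peak meets target_tops."""
    return math.ceil(target_tops / tops_per_engine)


if __name__ == "__main__":
    print(peak_tops(NPM18_ENGINES))         # 160.0, matching the quoted NPM18 figure
    print(engines_needed(MAX_SCALED_TOPS))  # 60 engines under the linear assumption
```

Under the same assumption, `engines_needed(1200.0, peak_tops(8))` gives 8, i.e. the 1,200 TOPS figure corresponds to roughly seven and a half NPM18-class cores' worth of peak compute.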

NeuPro-M can process all known neural network architectures and offers integrated native support for next-generation networks such as transformers, 3D convolution, self-attention and all types of recurrent neural networks. It has been optimised to process more than 250 neural networks, more than 450 AI kernels and more than 50 algorithms. The embedded vector processing unit (VPU) provides future-proof, software-based support for new neural network topologies and AI workloads, claims the company. According to Ceva, the CDNN offline compression tool can increase the frames per second per Watt of NeuPro-M by a factor of five to 10 on common benchmarks, with minimal impact on accuracy.

The NeuPro-M heterogeneous architecture is composed of function-specific co-processors and load-balancing mechanisms. By distributing control functions to local controllers and organising local memory resources hierarchically, NeuPro-M achieves a data flow flexibility that results in more than 90 per cent utilisation and protects the various co-processors and accelerators against data starvation at any given time. The CDNN framework achieves optimal load balancing by applying data flow schemes adapted to the specific network, the desired bandwidth, the available memory and the target performance.

Architecture highlights include a main grid array of 4K multiply-accumulate units (MACs) with mixed precision from two to 16 bits, and a Winograd transform engine for weights and activations that halves convolution time and allows 8-bit convolution processing with less than 0.5 per cent precision degradation. A sparsity engine skips operations on zero-value weights or activations on a per-layer basis, for a performance gain of up to a factor of four, while also reducing memory bandwidth and power consumption.
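The two techniques named above can be illustrated in software. The sketch below uses the textbook 1D Winograd F(2,3) algorithm, which produces two outputs of a 3-tap convolution with four multiplies instead of six, and a zero-skipping dot product that performs a MAC only when both operands are non-zero. This is a generic illustration, not Ceva's hardware design: the article does not disclose the tile size the Winograd engine uses (the 2D F(2x2,3x3) variant cuts multiplies from 36 to 16), and all function names here are hypothetical.

```python
from typing import List, Sequence, Tuple


def direct_f23(d: Sequence[float], g: Sequence[float]) -> List[float]:
    """Two outputs of a 3-tap 1D convolution (correlation form) computed directly: 6 multiplies."""
    return [d[0] * g[0] + d[1] * g[1] + d[2] * g[2],
            d[1] * g[0] + d[2] * g[1] + d[3] * g[2]]


def winograd_f23(d: Sequence[float], g: Sequence[float]) -> List[float]:
    """Same two outputs via Winograd F(2,3): 4 multiplies in the element-wise stage."""
    # Filter (weight) transform: for fixed weights this can be precomputed once per layer.
    u = (g[0], (g[0] + g[1] + g[2]) / 2, (g[0] - g[1] + g[2]) / 2, g[2])
    # Input (activation) transform followed by 4 element-wise multiplies.
    m1 = (d[0] - d[2]) * u[0]
    m2 = (d[1] + d[2]) * u[1]
    m3 = (d[2] - d[1]) * u[2]
    m4 = (d[1] - d[3]) * u[3]
    # Output transform: additions and subtractions only.
    return [m1 + m2 + m3, m2 - m3 - m4]


def zero_skipping_dot(w: Sequence[float], a: Sequence[float]) -> Tuple[float, int]:
    """Dot product that skips any MAC whose weight or activation is zero.

    Returns (result, number of MACs actually performed)."""
    acc, macs = 0.0, 0
    for wi, ai in zip(w, a):
        if wi == 0 or ai == 0:
            continue  # the product would be zero, so the MAC is skipped
        acc += wi * ai
        macs += 1
    return acc, macs


if __name__ == "__main__":
    d, g = [1.0, 2.0, 3.0, 4.0], [0.5, -1.0, 2.0]
    print(direct_f23(d, g), winograd_f23(d, g))  # both [4.5, 6.0]
    print(zero_skipping_dot([0, 2, 0, -1], [3, 4, 5, 0]))  # (8.0, 1)
```

In the zero-skipping example only one of the four MACs is executed, which is the kind of saving a sparsity engine exploits when many weights or activations in a layer are zero.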

The programmable VPU handles otherwise unsupported neural network architectures with all data types, from 32-bit floating point down to 2-bit binary neural networks (BNN). The architecture also offers configurable weight and data compression down to 2 bits when writing to memory, with real-time decompression on reading, to reduce memory bandwidth.
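To show why compressing weights down to 2 bits cuts memory bandwidth, the sketch below applies plain uniform (affine) quantisation to four levels and packs four 2-bit codes into each byte on write, unpacking them on read. The article does not describe Ceva's actual compression scheme; this generic quantise-and-pack approach and the function names in it are assumptions for illustration only.

```python
from typing import List, Tuple


def quantize_2bit(weights: List[float]) -> Tuple[List[int], float, float]:
    """Uniform affine quantisation to 4 levels: returns (codes in 0..3, scale, offset)."""
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / 3 if hi > lo else 1.0
    # Clamp guards against floating-point rounding pushing a code outside 0..3.
    codes = [min(3, max(0, round((w - lo) / scale))) for w in weights]
    return codes, scale, lo


def pack_2bit(codes: List[int]) -> bytes:
    """Compress on write: pack four 2-bit codes into each byte."""
    out = bytearray((len(codes) + 3) // 4)
    for i, c in enumerate(codes):
        out[i // 4] |= (c & 0b11) << (2 * (i % 4))
    return bytes(out)


def unpack_2bit(data: bytes, n: int) -> List[int]:
    """Decompress on read: recover the first n 2-bit codes."""
    return [(data[i // 4] >> (2 * (i % 4))) & 0b11 for i in range(n)]


def dequantize(codes: List[int], scale: float, lo: float) -> List[float]:
    """Map codes back to approximate weight values."""
    return [lo + c * scale for c in codes]


if __name__ == "__main__":
    w = [-1.0, -0.2, 0.3, 1.0, 0.0, 0.9, -0.7, 0.5]
    codes, scale, lo = quantize_2bit(w)
    packed = pack_2bit(codes)
    print(len(w) * 4, "bytes as float32 ->", len(packed), "bytes packed")
    print(dequantize(unpack_2bit(packed, len(w)), scale, lo))
```

Eight 32-bit weights (32 bytes) shrink to 2 bytes, a 16-fold reduction in the data moved to and from memory, at the cost of a quantisation error of at most half a step per weight.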

The dynamically configured two-level memory architecture is claimed to minimise the power consumed by data transfers to and from external SDRAM.

Ran Snir, vice president and general manager of the Vision Business Unit at Ceva, observes: “The . . . processing requirements of edge AI and edge compute are growing at an incredible rate, as more and more data is generated and sensor-related software workloads continue to migrate to neural networks for better performance and efficiencies. With the power budget remaining the same for these devices, we need to find new and innovative methods of utilising AI at the edge. . . [NeuPro-M’s] distributed architecture and shared memory system controllers reduces bandwidth and latency to an absolute minimum . . . With the ability to connect multiple NeuPro-M compliant cores in a SoC or Chiplet to address the most demanding AI workloads, our customers can take their smart edge processor designs to the next level.”

For the automotive market, NeuPro-M cores and Ceva’s CEVA Deep Neural Network (CDNN) deep learning compiler and software toolkit comply with the ISO 26262 ASIL-B functional safety standard and meet the IATF 16949 and A-Spice standards.

NeuPro-M is available for licensing to lead customers today and for general licensing in Q2 2022.