Xilinx introduces Kria adaptive SOMs to add AI at the edge

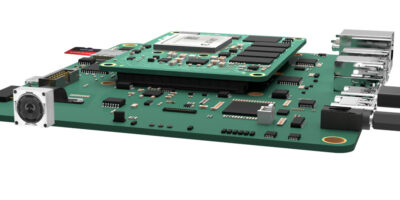

Adaptive system-on-modules (SOMs) from Xilinx are production-ready, small form factor embedded boards that enable rapid deployment in edge-based applications. The Kria adaptive SOMs can be coupled with a software stack and pre-built applications. According to Xilinx, the SOMs are a new method of bringing adaptive computing to AI and software developers.

The first product to be made available is the Kria K26 SOM. It specifically targets vision AI applications in smart cities and smart factories.

By allowing developers to start at a more evolved point in the design cycle compared to chip-down design, Kria SOMs can reduce time-to-deployment by up to nine months, says Xilinx.

The Kria K26 SOM is built on the Zynq UltraScale+™ MPSoC architecture, which features a quad-core Arm Cortex-A53 processor, more than 250,000 logic cells, and an H.264/H.265 video codec. It has 4Gbyte of DDR4 memory and 245 I/Os, which allow it to adapt to “virtually any sensor or interface”. There is also 1.4TOPS of AI compute performance, which Xilinx says is sufficient to create vision AI applications with more than three times the performance, at lower latency and power, of GPU-based SOMs. Target applications are smart vision systems, for example in security, traffic and city cameras, retail analytics, machine vision, and vision-guided robotics.
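To put the 1.4TOPS figure in context, the sketch below estimates a theoretical ceiling on inference rate from peak compute alone. It is a back-of-envelope illustration, not a Xilinx benchmark: the function name, the 8 giga-operations per frame workload and the 50 per cent utilisation factor are all hypothetical values chosen for the example, and real throughput also depends on memory bandwidth, model structure and the rest of the vision pipeline.

```python
def max_frames_per_second(peak_tops: float,
                          giga_ops_per_frame: float,
                          utilisation: float = 1.0) -> float:
    """Upper bound on frames per second from peak compute alone.

    peak_tops          -- peak AI compute in tera-operations per second
    giga_ops_per_frame -- operations one inference needs, in giga-operations
                          (hypothetical; depends entirely on the model)
    utilisation        -- fraction of peak compute actually sustained (0..1],
                          an assumed figure, not a measured one
    """
    if giga_ops_per_frame <= 0:
        raise ValueError("giga_ops_per_frame must be positive")
    if not 0 < utilisation <= 1:
        raise ValueError("utilisation must be in the range (0, 1]")

    ops_per_second = peak_tops * 1e12 * utilisation
    ops_per_frame = giga_ops_per_frame * 1e9
    return ops_per_second / ops_per_frame


if __name__ == "__main__":
    # 1.4 TOPS is the figure quoted for the K26; the other two numbers
    # are illustrative assumptions only.
    fps = max_frames_per_second(peak_tops=1.4,
                                giga_ops_per_frame=8.0,
                                utilisation=0.5)
    print(f"Theoretical ceiling: {fps:.1f} frames per second")
```

The result is only a ceiling: it shows how the quoted peak figure, the size of the model and the sustained utilisation trade off against each other, not what a given application will achieve.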

The hardware is coupled with software for production-ready, vision-accelerated applications, which eliminates all the FPGA hardware design work. Software developers can integrate custom AI models and application code. There is also the option to modify the vision pipeline using familiar design environments, such as the TensorFlow, PyTorch or Caffe frameworks, as well as the C, C++, OpenCL, and Python programming languages. These are enabled by the Vitis unified software development platform and libraries, adds Xilinx.

The company has also opened an embedded app store for edge applications. There are apps for Kria SOMs from Xilinx and its ecosystem partners. Xilinx apps range from smart camera tracking and face detection to natural language processing with smart vision. They are open source and provided free of charge.

For further customisation and optimisation, embedded developers can draw on support for standard Yocto-based PetaLinux. In the first collaboration between the two companies, Xilinx and Canonical will also provide support for Ubuntu Linux, a distribution widely used by AI developers. Customers can develop in either environment and take either approach to production. Both environments will come pre-built with a software infrastructure and helpful utilities.

Finally, the Kria KV260 Vision AI Starter Kit is purpose-built to support the accelerated vision applications available in the Xilinx App Store. The company claims developers can be “up and running in less than an hour with no knowledge of FPGAs or FPGA tools”. When customers are ready to move to deployment, they can transition to the Kria K26 production SOM, which is offered in commercial and industrial variants.

Xilinx has published a SOM roadmap with a range of products, from cost-optimised SOMs for size- and cost-constrained applications to higher performance modules that will offer developers more real-time compute capability per watt.

Kria K26 SOMs and the KV260 Vision AI Starter Kit are available to order now from Xilinx and its network of worldwide distributors. The KV260 Vision AI Starter Kit is available immediately, with the commercial-grade Kria K26 SOM shipping in May 2021 and the industrial-grade K26 SOM shipping this summer. Ubuntu Linux on Kria K26 SOMs is expected to be available in July 2021.