Nvidia prepares for AI infrastructure with Hopper architecture

At GTC 2022, Nvidia announced its next-generation accelerated computing platform “to power the next wave of AI data centres”. The Hopper architecture succeeds the Ampere architecture, which launched in 2020.

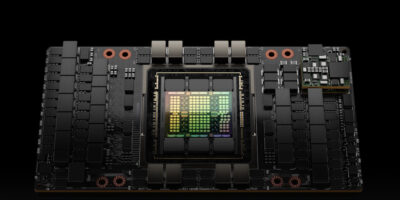

The company also announced its first Hopper-based GPU, the NVIDIA H100, equipped with 80 billion transistors. Described by Nvidia as the world’s largest and most powerful accelerator, the H100 has a Transformer Engine and a scalable NVLink interconnect for advancing gigantic AI language models, deep recommender systems, genomics and complex digital twins.

The Nvidia H100 GPU features major advances to accelerate AI, HPC, memory bandwidth, interconnect and communication, including nearly 5Tbytes per second of external connectivity. H100 is the first GPU to support PCIe Gen5 and the first to utilise HBM3, enabling 3Tbytes per second of memory bandwidth, claimed Nvidia.

According to Jensen Huang, CEO, 20 H100 GPUs can sustain the equivalent of the entire world’s internet traffic, making it possible for customers to deliver advanced recommender systems and large language models running inference on data in real time.

The H100's Transformer Engine is built to speed up transformer networks, such as the large language models mentioned above, by as much as six times compared with the previous generation without losing accuracy.

Multi-Instance GPU (MIG) technology allows a single GPU to be partitioned into seven smaller, fully isolated instances to handle different types of jobs. The Hopper architecture extends MIG capabilities by up to a factor of seven over the previous generation by offering secure multi-tenant configurations in cloud environments across each GPU instance.

According to the company, H100 is the first accelerator with confidential computing capabilities to protect AI models and customer data while they are being processed. Customers can also apply confidential computing to federated learning for privacy-sensitive industries like healthcare and financial services, as well as on shared cloud infrastructures.

To accelerate the largest AI models, NVLink combines with an external NVLink Switch to extend NVLink as a scale-up network beyond the server, connecting up to 256 H100 GPUs at nine times the bandwidth of the previous generation, which used NVIDIA HDR Quantum InfiniBand.

New DPX instructions accelerate dynamic programming by up to 40 times compared with CPUs and up to a factor of seven compared with previous generation GPUs, said Nvidia. This includes, for example, the Floyd-Warshall algorithm to find optimal routes for autonomous robot fleets and the Smith-Waterman algorithm used in sequence alignment for DNA and protein classification and folding.

Availability

The NVIDIA H100 will be available starting in Q3.
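
For readers unfamiliar with the two dynamic programming algorithms that the DPX paragraph names, the following is a minimal CPU-only sketch in plain Python. It does not use DPX instructions or any Nvidia API; it only illustrates the table-filling pattern of repeated add, min and max operations that such algorithms share. The function names, the example graph and the scoring values (`match`, `mismatch`, `gap`) are assumptions chosen for illustration and do not come from Nvidia.

```python
import math

INF = math.inf  # marks "no direct edge" in the weight matrix


def floyd_warshall(weights):
    """All-pairs shortest path lengths for a square weight matrix."""
    n = len(weights)
    dist = [row[:] for row in weights]  # copy so the input is not modified
    for k in range(n):          # allow node k as an intermediate stop
        for i in range(n):
            for j in range(n):
                via_k = dist[i][k] + dist[k][j]
                if via_k < dist[i][j]:
                    dist[i][j] = via_k
    return dist


def smith_waterman_score(a, b, match=2, mismatch=-1, gap=-1):
    """Best local alignment score of sequences a and b (linear gap penalty)."""
    rows, cols = len(a) + 1, len(b) + 1
    score = [[0] * cols for _ in range(rows)]
    best = 0
    for i in range(1, rows):
        for j in range(1, cols):
            step = match if a[i - 1] == b[j - 1] else mismatch
            score[i][j] = max(
                0,                        # start a new local alignment
                score[i - 1][j - 1] + step,  # align a[i-1] with b[j-1]
                score[i - 1][j] + gap,    # gap in b
                score[i][j - 1] + gap,    # gap in a
            )
            best = max(best, score[i][j])
    return best


# Hypothetical four-node route graph; graph[i][j] is the cost of edge i -> j.
graph = [
    [0, 3, INF, 7],
    [8, 0, 2, INF],
    [5, INF, 0, 1],
    [2, INF, INF, 0],
]

shortest = floyd_warshall(graph)
print(shortest[1][0])  # cheapest route from node 1 to node 0
print(smith_waterman_score("GGACGTGG", "TTACGTTT"))
```

Both functions spend nearly all of their time in the innermost update, which combines an addition with a minimum or maximum. That inner step is the kind of operation dynamic programming accelerators target, although how DPX maps onto it is not described in the announcement.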